Overview

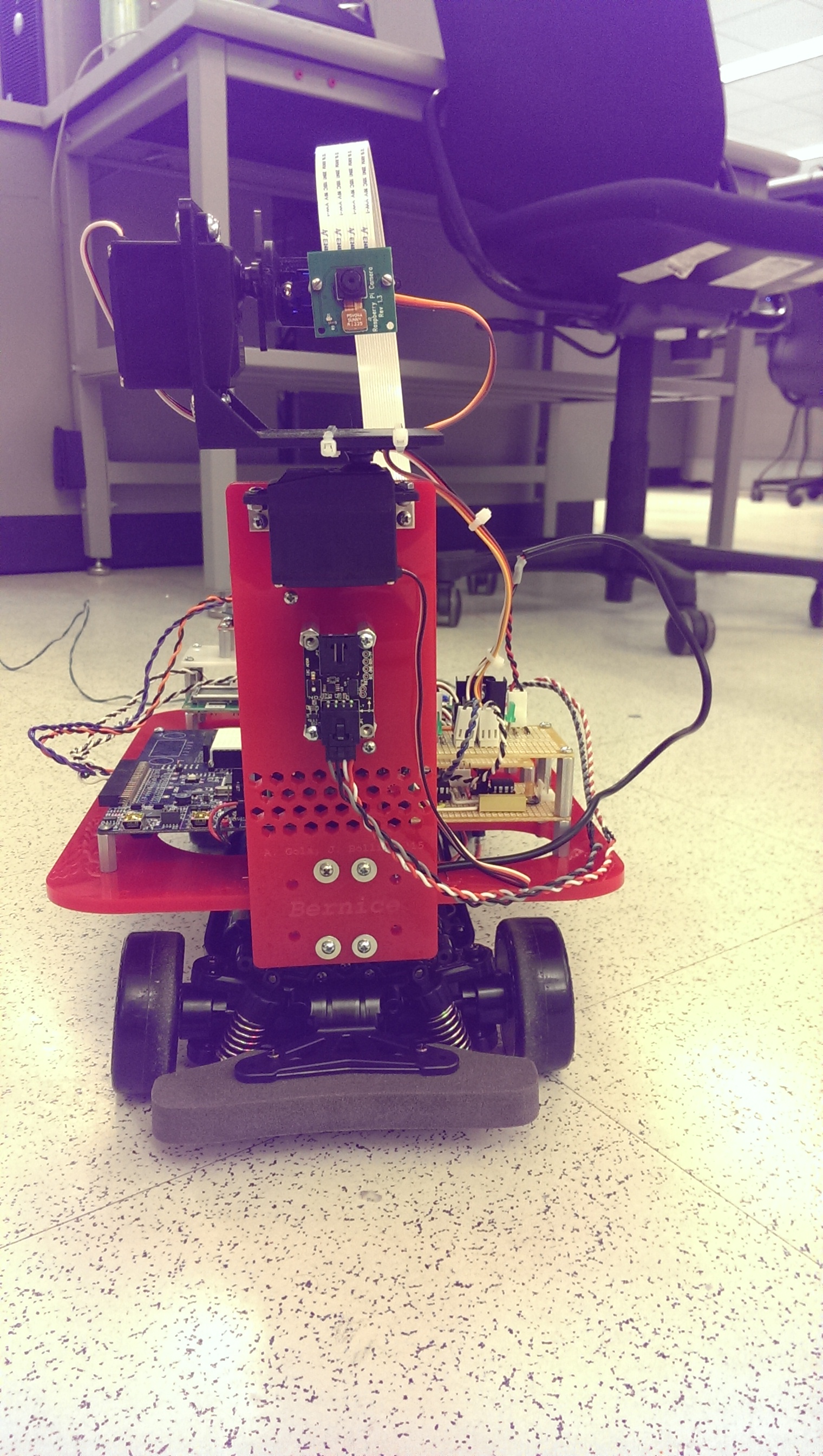

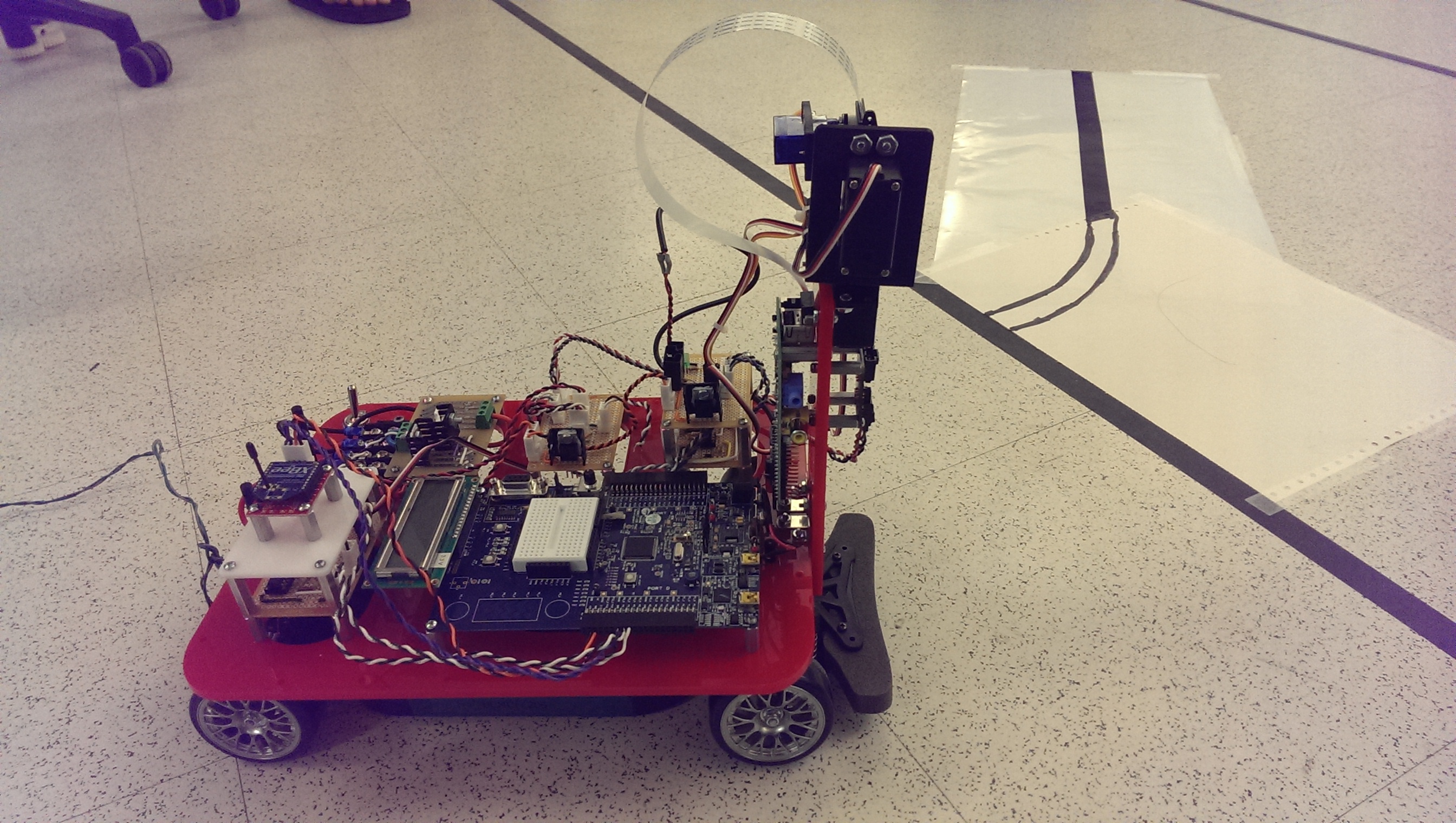

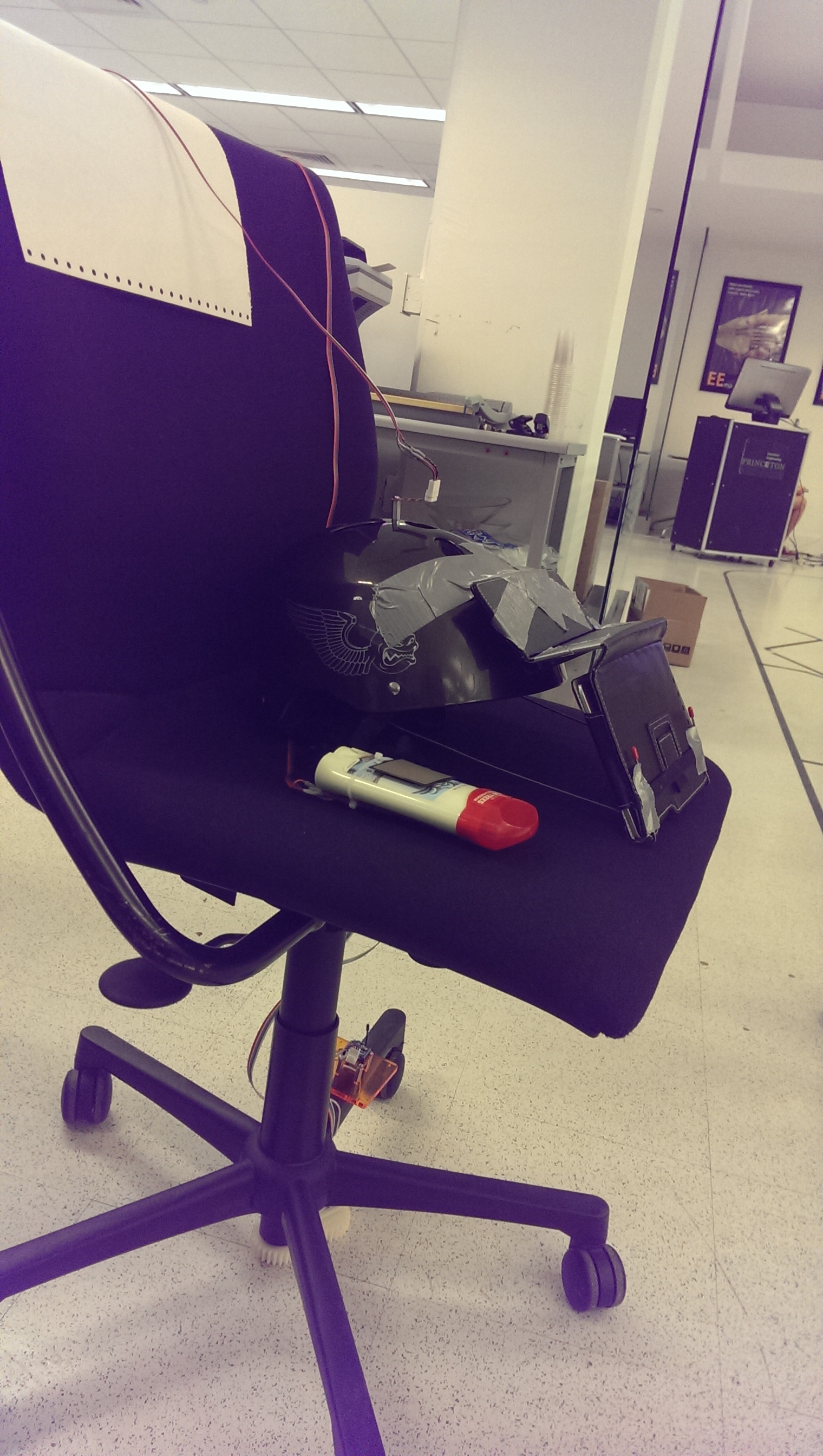

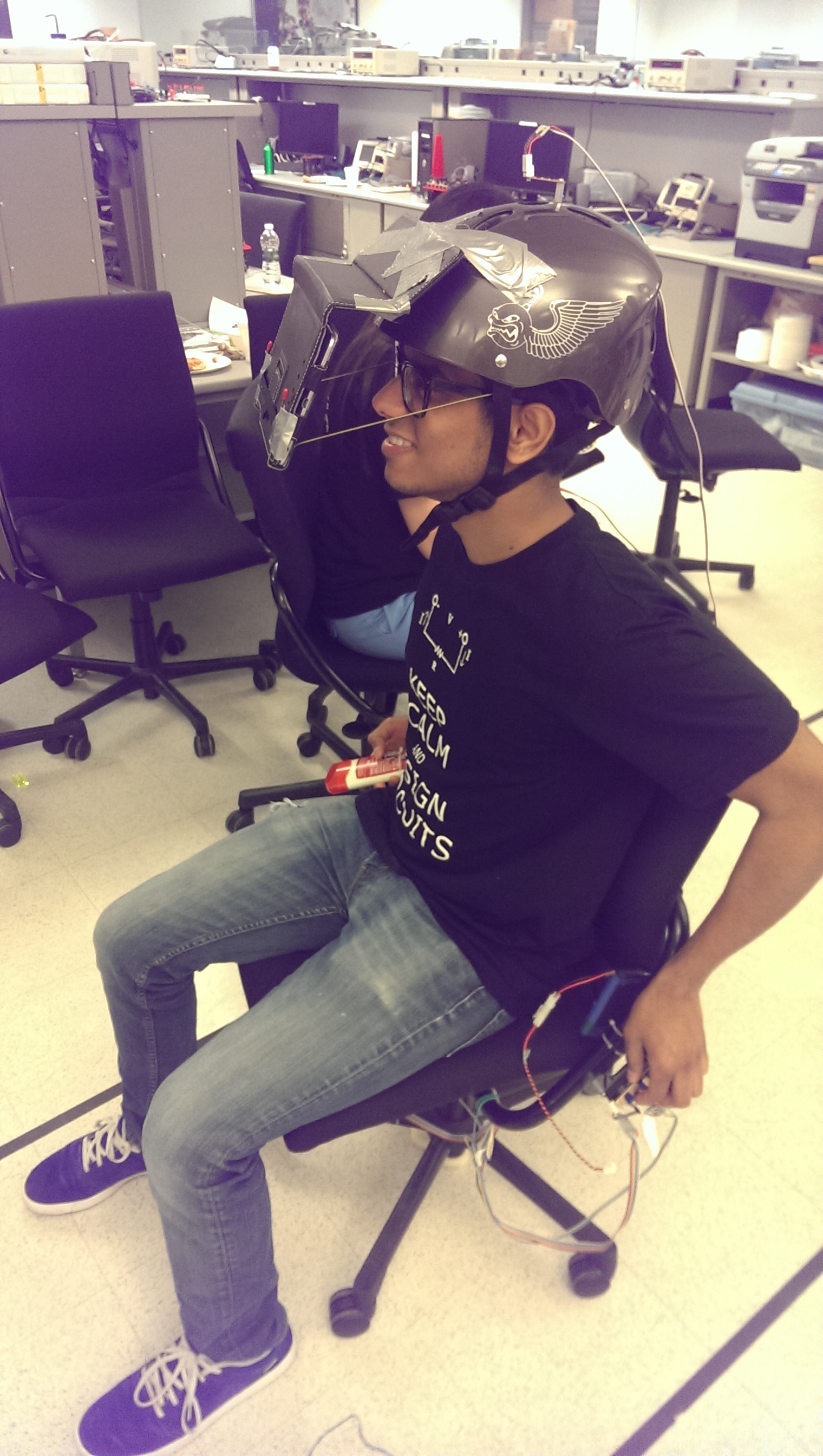

We designed and implemented a telepresence station to control a model car. The station was mounted on a swivel chair. It was equipped with three input devices. A helmet tracked head orientation and provided visual feedback. An encoder translated swivel rotation into steering. A handheld controller let the user control speed and delivered haptic (vibrational) feedback. An Arduino Mega ran the software that connected to and read all of the user-mounted sensors. The car was controlled by a Cypress Programmable System-on-Chip (PSoC). The chair and car communicated with each other over a pair of XBee modules. The other main components were:

- a 9-DOF inertial measurement unit (IMU) for head tracking
- an accelerometer and a vibration motor for haptic feedback
- a pressure sensor for throttle control
- a rotary encoder for steering
- a Raspberry Pi with a camera for video streaming
- three servos for camera control
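The project code is not published, so the following is only a hypothetical C sketch. It shows how the chair side might turn encoder and pressure-sensor readings into commands for the car. The sketch makes several assumptions:

- The XBee modules run in transparent mode, so they simply forward serial bytes.
- Packets use a made-up 4-byte format with a start byte and an XOR checksum.
- The encoder range is ±400 counts.
- Steering is a command from 0 to 180, with 90 meaning straight.
- The pressure sensor gives a 10-bit ADC reading.

None of these names, values, or formats come from the project's actual code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* HYPOTHETICAL packet format (not the project's actual protocol):
 * [START_BYTE][steering][throttle][checksum] */
#define START_BYTE 0xAA

/* Assumed chair rotation range, in encoder counts, on each side of center. */
#define ENCODER_RANGE 400L

/* Map a signed encoder count to a steering command in 0..180 (90 = straight),
 * clamping any rotation beyond +/- ENCODER_RANGE. */
uint8_t steering_from_encoder(long counts) {
    if (counts > ENCODER_RANGE) counts = ENCODER_RANGE;
    if (counts < -ENCODER_RANGE) counts = -ENCODER_RANGE;
    /* Linear map from [-ENCODER_RANGE, ENCODER_RANGE] to [0, 180]. */
    return (uint8_t)((counts + ENCODER_RANGE) * 180L / (2L * ENCODER_RANGE));
}

/* Map an assumed 10-bit ADC reading (0..1023) from the pressure sensor
 * to a throttle value in 0..255 by dropping the two low bits. */
uint8_t throttle_from_pressure(uint16_t adc) {
    if (adc > 1023) adc = 1023;
    return (uint8_t)(adc >> 2);
}

/* Fill a 4-byte packet; the checksum is a simple XOR of the two payload bytes. */
void build_packet(uint8_t steer, uint8_t throttle, uint8_t out[4]) {
    assert(out != NULL);
    out[0] = START_BYTE;
    out[1] = steer;
    out[2] = throttle;
    out[3] = (uint8_t)(steer ^ throttle);
}

/* Validate a received packet (e.g. on the car side). Returns 1 and writes
 * the payload on success, or 0 if the start byte or checksum is wrong. */
int parse_packet(const uint8_t in[4], uint8_t *steer, uint8_t *throttle) {
    if (in[0] != START_BYTE) return 0;
    if ((uint8_t)(in[1] ^ in[2]) != in[3]) return 0;
    *steer = in[1];
    *throttle = in[2];
    return 1;
}
```

For example, `build_packet(steering_from_encoder(200), throttle_from_pressure(512), pkt)` fills `pkt` with a steering value of 135 and a throttle of 128. `parse_packet` on the receiving side would reject the packet if the start byte or XOR checksum did not match. A real serial link would also need a way to resynchronize when a payload byte happens to equal the start byte.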

Unfortunately, I cannot post the code and final paper online since current ELE 302 students might see our implementation details. However, please feel free to email me if you would like to see the final paper/code!

People

Ankush Gola, Joseph Bolling

Media

Video

Sorry for sounding so dreary in the video; I must have been pretty tired at the time...

Pictures